Consensus algorithms at scale: Part 2 - Rules of consensus

By Sugu Sougoumarane

If you’re still catching up, you can find links to each article in the series at the bottom of this article.

The Rules of Consensus

YouTube Scale

When we were running Vitess at YouTube, there were tens of thousands of nodes serving very high QPS. The scale out was in all dimensions: some of the shards had over fifty replicas. The topology was complicated with these nodes being spread out across multiple data centers. To make this work, we had to strike a balance between latency, availability and durability. To meet these requirements, we used to perform regular failovers that were mostly automated. I am happy to say we never lost data due to hardware failure.

The first time we heard about Paxos, it sounded magical: an algorithm that will dynamically elect a leader to ensure that all requests are fulfilled without errors, divergence, or data loss. The Vitess failovers used to take a few seconds, and we wanted to avoid serving errors during this period.

We started to evaluate Paxos to see if it could be retrofitted into Vitess. We quickly found that our quorum sizes would have been too big for a majority-based algorithm. Also, the MySQL replication mechanism didn’t look anything like the durability mechanism Paxos was describing. Our only option was to do a gap analysis between the two systems. In a way, this is what led to the discovery of FlexPaxos: an additional knob that allows you to achieve a more meaningful trade-off between performance and safety.

There were other differences: our failover algorithms did not look anything like what Paxos recommends. Studying how the two systems differed led to the discovery of a common set of principles that any leader-based system can follow to guarantee correctness and safety. We will cover these rules in this blog post.

Why Consensus

We are focused on using Consensus to address durability at scale. It is possible that there are other use cases, but we are not concerned about those.

No system can give you absolute durability guarantees; there is always the possibility of a catastrophic failure that is bigger than anticipated. You must decide the level of failure tolerance you want. This depends on the reliability of the resources and the criticality of the data. In other words, durability requirements are use-case dependent.

To accommodate all possible use cases, we will treat durability as an abstract requirement. The algorithms must be agnostic of these requirements, and should be able to accommodate arbitrarily complex rules. This changes the way we approach the problem, and we will go through this exercise in the following sections.
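One way to picture durability as an abstract requirement is a predicate over the set of nodes that have acknowledged a request. The sketch below is only an illustration under that assumption; the names `DurabilityPolicy`, `at_least`, `cross_zone`, and `is_durable` are hypothetical and do not come from Vitess or any other system.

```python
from typing import Callable, Dict, FrozenSet, Iterable

# Assumption: a durability rule is any predicate over the set of nodes
# that have acknowledged a request. All names here are hypothetical.
DurabilityPolicy = Callable[[FrozenSet[str]], bool]


def at_least(n: int) -> DurabilityPolicy:
    """Durable once any n nodes have acknowledged."""
    return lambda acks: len(acks) >= n


def cross_zone(zone_of: Dict[str, str], zones: int) -> DurabilityPolicy:
    """Durable once acknowledgements span at least `zones` distinct zones."""
    return lambda acks: len({zone_of[node] for node in acks}) >= zones


def is_durable(policy: DurabilityPolicy, acks: Iterable[str]) -> bool:
    # The algorithm only asks this yes/no question; it never looks
    # inside the rule, so the rule can be arbitrarily complex.
    return policy(frozenset(acks))


# Example topology: two nodes in one zone, one node in another.
zone_of = {"a1": "east", "a2": "east", "b1": "west"}
two_nodes = at_least(2)
two_zones = cross_zone(zone_of, 2)
```

With this shape, `is_durable(two_nodes, ["a1", "a2"])` holds, while `is_durable(two_zones, ["a1", "a2"])` does not, because both acknowledgements come from the same zone. The calling code is identical in both cases; only the rule changes.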

Single Value Behavior

Paraphrasing the definition from Paxos: the primary guarantee we want from a consensus system is that it must not forget a value it has acknowledged as accepted. Once a value is accepted, all other values must be rejected.

When asked to accept a single value, the operation has one of the following three outcomes:

- Accepted: the value was successfully accepted.

- Rejected: the value was rejected.

- Failed: the operation did not succeed, but may succeed later.

If the first request was Accepted, then any subsequent attempts to write a different value will be Rejected.

If the first request was Rejected, it likely means that a previous value was accepted before our “first” attempt. In this case, subsequent requests will also be Rejected.

If the first request Failed and a second request is made, the system can choose to finalize either of the requests as Accepted, but not both. Since the second request can also Fail, we need to restate this more generally: the system can choose to Accept any one of the previously requested values as final. Pathological failure modes can cause the system to remain in the Failed state indefinitely, but it is generally expected to converge eventually.
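The three outcomes can be modeled with a toy, single-process register. This is a minimal sketch and not a distributed implementation: the `durable` flag stands in for "the durability requirements were met", and the class and method names are hypothetical. Two choices here are assumptions rather than rules from the text: when finalizing, the register picks the oldest pending value, and re-proposing the already accepted value is reported as Accepted.

```python
from enum import Enum


class Outcome(Enum):
    ACCEPTED = "accepted"
    REJECTED = "rejected"
    FAILED = "failed"


class SingleValueRegister:
    """Toy model of the single-value contract (hypothetical names)."""

    def __init__(self):
        self.final = None   # the one value that has been accepted, if any
        self.pending = []   # values requested so far but not finalized

    def propose(self, value, durable: bool) -> Outcome:
        if self.final is not None:
            # A value is already final: every different value is rejected.
            # Assumption: repeating the same value is treated as accepted.
            return Outcome.ACCEPTED if value == self.final else Outcome.REJECTED

        if value not in self.pending:
            self.pending.append(value)

        if not durable:
            # Nothing is final yet; a later attempt may still succeed.
            return Outcome.FAILED

        # The system may finalize any previously requested value.
        # Assumption: this sketch always picks the oldest one.
        self.final = self.pending[0]
        self.pending.clear()
        return Outcome.ACCEPTED if value == self.final else Outcome.REJECTED
```

For example, if `propose("v1", durable=False)` fails and `propose("v2", durable=True)` follows, this register finalizes `v1` and rejects `v2`. A different implementation could legitimately finalize `v2` instead, as long as it never finalizes both.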

In Practice

It is not very practical for a system to just accept a single value. Instead, let us see what should happen if we changed the specifications to a system that accepts a series of values, which is what storage systems typically do.

If a system first accepts a value v1, and later receives a request for v2, it must record v2 as having happened after v1. The more significant property is the following: If the request for v1 failed because the system was not able to meet the durability requirements, then a request for v2 requires the system to make a final decision on whether v1 should be completed or rejected. If completed, it will record v2 after v1. Otherwise, v1 is discarded and v2 will be the only accepted value.
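The v1/v2 behavior described above can be sketched as a small in-memory log. This is an illustration only: `RequestLog`, `append`, and the `complete_tentative` flag are hypothetical names, `durable` stands in for the durability check, and the decision to complete or discard the earlier request is passed in by the caller instead of being derived from the state of real nodes.

```python
class RequestLog:
    """Toy ordered log that resolves a failed request before moving on."""

    def __init__(self):
        self.completed = []    # requests in their final, strict order
        self.tentative = None  # at most one request whose fate is undecided

    def append(self, request, durable: bool, complete_tentative: bool = True) -> bool:
        # A new request forces a final decision on the earlier one:
        # either it is completed (and ordered first) or it is discarded.
        if self.tentative is not None:
            if complete_tentative:
                self.completed.append(self.tentative)
            self.tentative = None

        if durable:
            self.completed.append(request)
            return True

        # Durability was not met: remember the request as undecided.
        self.tentative = request
        return False
```

If v1 fails and v2 then succeeds with `complete_tentative=True`, the log ends up as `["v1", "v2"]`. With `complete_tentative=False`, v1 is discarded and the log is `["v2"]`. In neither case can v1 reappear after v2.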

The authors of Raft understood this, which is why they describe their system as a way to achieve consistent log replication.

In our case, we will think in terms of requests rather than values, which can be any operation a storage system may have to perform. It could be a transaction, or setting the value for a key, or any other atomic operation.

Let us restate: The purpose of a consensus system is to accept a series of requests in a strict order and keep them consistent across multiple nodes.

Single Leader

To limit complexity and scope, we are going to stick to single leader designs. The popular implementations that I know of use the single leader approach. There is research on leaderless and multi-leader algorithms, but I am not very familiar with them.

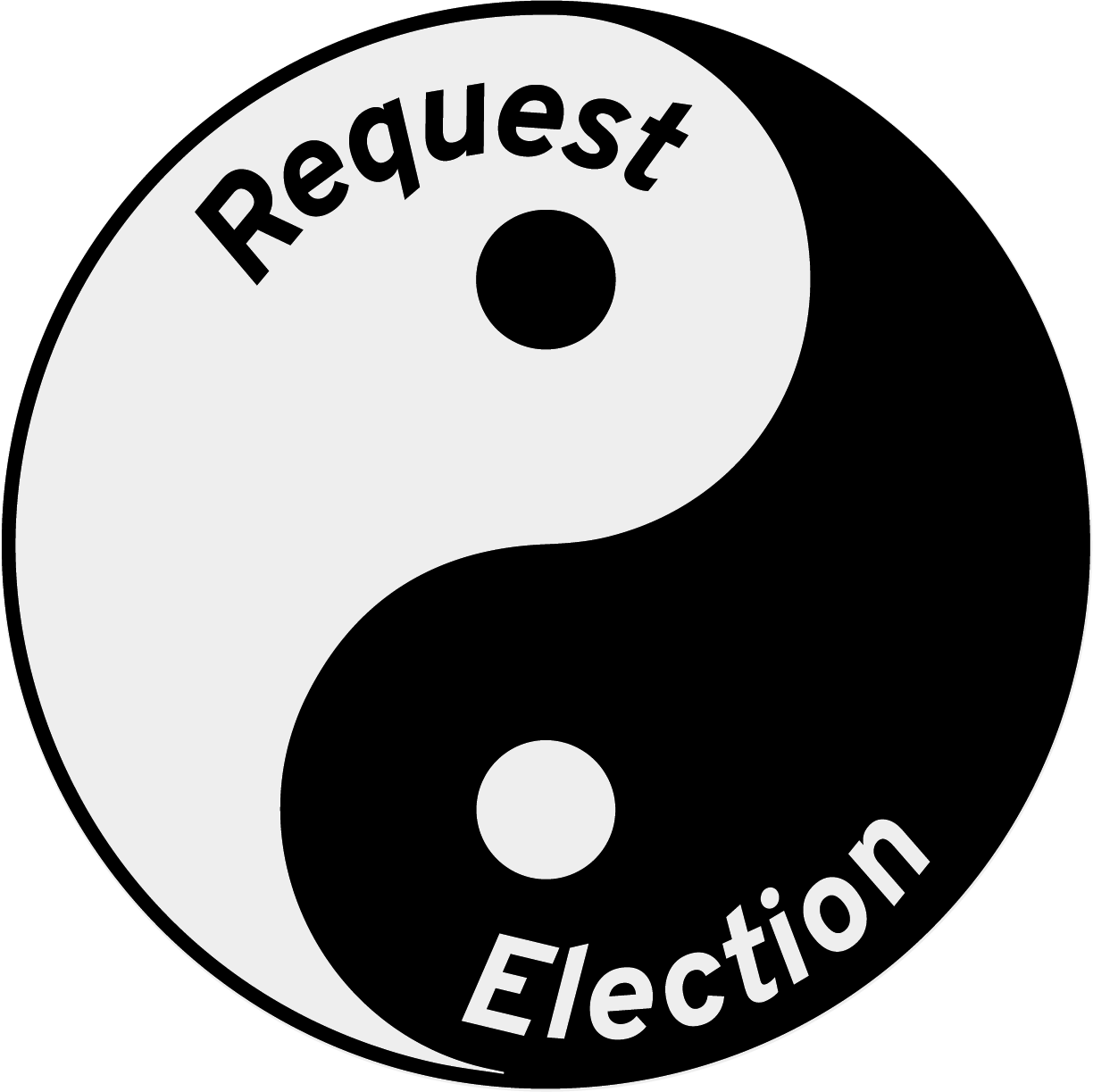

A Single Leader consensus system is a combination of two workflows that cooperate with each other:

- A leader accepts requests and makes them durable.

- A new leader can be elected to resume requests without divergence or loss of data.

Paxos and Raft also use the single leader approach.

The Rules

Now that we have spent enough time building up the premise, it is time to codify the rules governing a consensus system:

1. A Leader’s job is to fulfill requests by satisfying the mandated durability requirements.

2. To elect a new Leader, the following actions must be performed:

   - Terminate the previous Leadership, if any.

   - Recruit the necessary nodes for the new Leader.

   - Propagate previously completed requests to satisfy the new Leader’s durability requirements.

3. Forward Progress: If a Leader election fails, a subsequent re-attempt should have a path to success, where a new Leader can be elected without breaking the durability and safety guarantees.

4. Race: If concurrent attempts are being made to elect a Leader, at most one Leader must prevail.

Rules 3 & 4 are actually implicit in rule number 2. But these properties are so important that it’s worth making them explicit.
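To make the three election actions concrete, here is one deliberately naive, single-process sketch. It is only one of many possible ways to satisfy the second rule, and it makes strong assumptions: every node is reachable, nothing fails midway, there are no concurrent elections (so forward progress and races are not addressed), and the longest log is assumed to contain every completed request. `Node`, `accept`, and `elect` are hypothetical names.

```python
class Node:
    def __init__(self, name: str):
        self.name = name
        self.leader = None  # the leader this node currently follows
        self.log = []       # requests this node has stored, in order

    def accept(self, leader: str, request) -> bool:
        # A node only stores requests from the leader it follows.
        if self.leader != leader:
            return False
        self.log.append(request)
        return True


def elect(candidate: str, nodes: list) -> str:
    # Terminate: detach every node from the previous leader so it can
    # no longer gather acknowledgements for new requests.
    for node in nodes:
        node.leader = None

    # Recruit: attach the nodes to the new leader.
    for node in nodes:
        node.leader = candidate

    # Propagate: copy the most complete log to every recruited node.
    # Assumption: the longest log holds all previously completed requests.
    longest = max((node.log for node in nodes), key=len)
    for node in nodes:
        node.log = list(longest)

    return candidate
```

After `elect("b", nodes)`, a call such as `nodes[2].accept("a", "r2")` returns `False`: the old leader has been terminated, and any request a node stored under it has been propagated to the nodes recruited by the new one.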

These rules are intentionally generic to allow for creativity in achieving these goals. In fact, being more specific than this will exclude some valid implementations. However, we will show multiple ways to satisfy these rules. We will also validate the existing popular algorithms against this new set of rules.

You will notice the following differences:

- No mention of a majority quorum.

- No mention of intersection of nodes.

- No proposal numbers.

This is where we deviate from traditional systems because we believe these are not strictly required for a consensus system to operate correctly. For example, you can build a consensus system with fifty nodes, but still only have a quorum size of two. There is no need for these quorums to intersect across leaders. As for proposal numbers, very few understand why they are even needed. It is better to discuss what we are trying to achieve, and then introduce proposal numbers as one option, and maybe consider alternatives that don’t involve proposal numbers. We will drill down into each of these properties and explore trade-offs between multiple approaches.

In the next post, we will cover some practical use cases that this generalized set of rules allows us to cover. We will also drill deeper into the meaning and significance of these rules.

Read the full Consensus Algorithms series

- Consensus Algorithms at Scale: Part 1 — Introduction

- You just read: Consensus Algorithms at Scale: Part 2 — Rules of consensus

- Next up: Consensus Algorithms at Scale: Part 3 — Use cases

- Consensus Algorithms at Scale: Part 4 — Establishment and revocation

- Consensus Algorithms at Scale: Part 5 — Handling races

- Consensus Algorithms at Scale: Part 6 — Completing requests

- Consensus Algorithms at Scale: Part 7 — Propagating requests

- Consensus Algorithms at Scale: Part 8 — Closing thoughts